Simple SSAO implementation (XNA 4.0)

Why SSAO?

Games usually combine baked indirect lighting with purely local lighting models for dynamic objects and dynamic lights. Occlusion of dynamic lights is evaluated with shadow maps, but occlusion of indirect lighting is mostly absent. In the real world, the illumination around an object standing on flat ground is not uniform: some areas close to the object are noticeably darker. On top of that, surfaces are almost never completely flat, since most materials are rough and bumpy.

Screen Space Ambient Occlusion is therefore attractive for several reasons. First, it improves the overall image quality of a video game because it gives a more believable lighting model. Second, it is easy to understand. Third, it can be implemented as a simple pixel shader in a deferred renderer, so there is no need to change your whole architecture. Finally, the effect can be extended to calculate not only occlusion but also indirect lighting (see my follow-up post).

Here is a step by step recipe to implement SSAO.

How does it work?

My environment is XNA 4.0 and HLSL, because I recently did a project with it and am currently used to it.

Not much is needed besides that: the depth buffer, the color map, the normal map and a noise map.

Ah, before I forget: My implementation is based on various blog posts from the internet, for example this one.

Roughly speaking, for every pixel of the output image, we look at the depth values of some surrounding pixels.

We choose those points by randomly sampling a hemisphere around the pixel's position.

When a sampled point is closer to the camera, we can assume that the source pixel lies behind it, which means the point occludes the source pixel's surface, depending on the distance between the two.

Step 1: Gather samples randomly

Of course it depends on your tech stack how exactly you need to implement this.

I just reimplemented a deferred rendering engine from a random XNA tutorial and pipe my maps as textures into a post effect shader.

float currentPixelDepth = tex2D(depthSampler,input.TexCoord).r;

float3 color = tex2D(colorSampler,input.TexCoord).rgb;

float3 noise = tex2D(noiseSampler,input.TexCoord).rgb;

float3 norm = tex2D(normalSampler,input.TexCoord).rgb;

Now we need some randomness. When the sample count is known upfront, the sample vectors can be precalculated with a distribution of your choice.

float3 pSphere[16] = {
    float3(0.53812504, 0.18565957, -0.43192),
    float3(0.13790712, 0.24864247, 0.44301823),
    float3(0.33715037, 0.56794053, -0.005789503),
    float3(-0.6999805, -0.04511441, -0.0019965635),
    float3(0.06896307, -0.15983082, -0.85477847),
    float3(0.056099437, 0.006954967, -0.1843352),
    float3(-0.014653638, 0.14027752, 0.0762037),
    float3(0.010019933, -0.1924225, -0.034443386),
    float3(-0.35775623, -0.5301969, -0.43581226),
    float3(-0.3169221, 0.106360726, 0.015860917),
    float3(0.010350345, -0.58698344, 0.0046293875),
    float3(-0.08972908, -0.49408212, 0.3287904),
    float3(0.7119986, -0.0154690035, -0.09183723),
    float3(-0.053382345, 0.059675813, -0.5411899),
    float3(0.035267662, -0.063188605, 0.54602677),
    float3(-0.47761092, 0.2847911, -0.0271716)
};
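If you want a table of a different size, it can be generated offline. The following Python snippet is a minimal sketch of one way to do that, not the method my source used: it picks random directions and gives each a random length between 0.1 and 1.0. The function names, the seed and the length range are all assumptions made for illustration.

```python
import math
import random


def make_sample_kernel(count, seed=0):
    """Return `count` random 3D offsets that lie inside the unit sphere."""
    rng = random.Random(seed)  # fixed seed: the table is generated once, offline
    kernel = []
    for _ in range(count):
        # A normalized Gaussian vector is a uniformly distributed direction.
        x, y, z = (rng.gauss(0.0, 1.0) for _ in range(3))
        length = math.sqrt(x * x + y * y + z * z)
        if length == 0.0:
            x, y, z, length = 1.0, 0.0, 0.0, 1.0  # degenerate draw, practically never hit
        # Assumed length range 0.1..1.0, so samples spread through the sphere.
        scale = rng.uniform(0.1, 1.0) / length
        kernel.append((x * scale, y * scale, z * scale))
    return kernel


def to_hlsl(kernel):
    """Format the kernel as an HLSL array initializer."""
    rows = ",\n".join("    float3(%.8f, %.8f, %.8f)" % v for v in kernel)
    return "float3 pSphere[%d] = {\n%s\n};" % (len(kernel), rows)


kernel = make_sample_kernel(16)
print(to_hlsl(kernel))
```

The printed text can be pasted into the shader in place of the hard-coded table.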

Next, we generate the random normals that we need later for the reflection.

This is where the noise map comes into play.

For every pixel of the output image, we have a random normal. It can be offset as well.

float3 fres = normalize(noise*2.0 - float3(1.0, 1.0, 1.0));

float3 ep = float3(input.TexCoord,currentPixelDepth);
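A noise texture stores values in the range 0 to 1, while a direction needs components between -1 and 1. The usual decoding is to remap each channel first and normalize afterwards. Here is that step as a small CPU-side Python sketch. The name decode_noise and the fallback for a mid-grey texel are my own assumptions.

```python
import math


def decode_noise(rgb):
    """Map a texel with channels in [0, 1] to a unit-length direction."""
    x, y, z = (2.0 * c - 1.0 for c in rgb)  # remap [0, 1] -> [-1, 1]
    length = math.sqrt(x * x + y * y + z * z)
    if length < 1e-8:
        # A mid-grey texel has no direction; this fallback is an assumption.
        return (0.0, 0.0, 1.0)
    return (x / length, y / length, z / length)


print(decode_noise((1.0, 0.5, 0.5)))  # (1.0, 0.0, 0.0)
```

Normalizing after the remap matters because reflect expects a unit-length vector as its second argument.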

Then, my source makes the sample radius depend on the depth: the bigger the depth, the smaller the radius in screen space. This is a good idea, because for things far away, a given distance in screen space corresponds to a larger distance in world space, so the radius has to shrink to cover roughly the same area around the pixel.

For rad my source uses a value of 0.006, which seems to be arbitrarily chosen.

float radD = rad/currentPixelDepth;
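To get a feeling for this division, here is the same calculation as a few lines of Python. It is only a sketch: I assume the depth is a normalized value between 0 and 1, and the clamp against very small depths is my own addition, not something the shader does.

```python
def screen_radius(rad, depth, min_depth=1e-4):
    """Screen-space sample radius for a pixel at the given depth."""
    # The clamp is an assumption of this sketch; it avoids a division by zero.
    return rad / max(depth, min_depth)


for depth in (0.1, 0.5, 1.0):
    print(depth, screen_radius(0.006, depth))
```

Dividing by the depth means that pixels close to the camera get a large radius in screen space and pixels far away get a small one.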

Step 2: The loop

Now, for every pixel, we need to do some steps.

Before the loop starts, we define our accumulator variables.

float bl = 0.0f;

float occluderDepth, depthDifference, normDiff;

You decide how many iterations the loop should have. The more samples, the better the quality of the effect.

For more than 16 samples, you will need a bigger random vector than I do.

for(int i=0; i<9;++i)

{

// Choose a vector from the hemisphere and reflect it with the noise vector

float3 ray = radD*reflect(pSphere[i],fres);

// When the ray leaves the hemisphere, its direction is flipped

float3 se = ep + sign(dot(ray,norm) )*ray;

// Get depth value of occluder

float3 occluderFragment = tex2D(depthSampler,se.xy).rgb;

// Get normal of the occluder

float3 occNorm = tex2D(normalSampler,se.xy).rgb;

// When the difference is negative, the occluder is behind the current source pixel surface

depthDifference = currentPixelDepth-occluderFragment.r;

// Dot product as a weight

normDiff = (1.0-dot(occNorm,norm));

// The falloff term: zero below falloff, then fading out smoothly as the difference approaches strength

bl += step(falloff,depthDifference)*normDiff*(1.0-smoothstep(falloff,strength,depthDifference));

}
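The two vector operations at the top of the loop are easier to follow outside the shader. Below is a CPU-side Python sketch of them, assuming the noise vector is unit length. The helper names are mine. One difference is labeled in the code: HLSL's sign returns 0 for a dot product of exactly 0, which would cancel the ray, while this sketch keeps it.

```python
def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def reflect(i, n):
    """Same formula as HLSL reflect: i - 2 * dot(i, n) * n (n must be unit length)."""
    d = 2.0 * dot(i, n)
    return (i[0] - d * n[0], i[1] - d * n[1], i[2] - d * n[2])


def hemisphere_ray(sample, noise_dir, normal, radius):
    """Scaled, randomized sample vector that points to the normal's side."""
    r = reflect(sample, noise_dir)
    ray = (radius * r[0], radius * r[1], radius * r[2])
    # Flip rays that point into the surface. Unlike sign() in the shader,
    # a ray exactly perpendicular to the normal is kept, not zeroed.
    if dot(ray, normal) < 0.0:
        ray = (-ray[0], -ray[1], -ray[2])
    return ray


ray = hemisphere_ray((0.0, 0.0, -1.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0), 0.5)
print(dot(ray, (0.0, 0.0, 1.0)) >= 0.0)  # True
```

Reflecting the fixed table about a per-pixel random vector gives every pixel a differently oriented sample pattern without storing more than 16 vectors.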

The last line of the loop is important for a smooth falloff. My falloff is 0.000002.
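To see what that expression does for different depth differences, here it is rebuilt in Python. This is a sketch that reimplements the HLSL intrinsics step and smoothstep by hand. The function name occlusion_term is made up, and the default values are the ones from this post.

```python
def step(edge, x):
    """HLSL step: 1 when x >= edge, otherwise 0."""
    return 1.0 if x >= edge else 0.0


def smoothstep(lo, hi, x):
    """HLSL smoothstep: smooth 0..1 ramp while x moves from lo to hi."""
    t = min(max((x - lo) / (hi - lo), 0.0), 1.0)
    return t * t * (3.0 - 2.0 * t)


def occlusion_term(depth_difference, norm_diff, falloff=0.000002, strength=0.07):
    """Contribution of one sample to the accumulator bl."""
    return (step(falloff, depth_difference) * norm_diff
            * (1.0 - smoothstep(falloff, strength, depth_difference)))


for d in (-0.01, 0.000002, 0.02, 0.07):
    print(d, occlusion_term(d, 1.0))
```

A sample behind the surface contributes nothing, a sample just in front of it contributes fully, and the contribution fades to zero as the depth difference reaches strength. That keeps distant geometry from darkening the pixel.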

Step 3: Finish

After the loop, the occlusion value is multiplied by an intensity factor and divided by the sample count (invSamples is 1.0 divided by the number of loop iterations).

strength is 0.07 here, totStrength is 1.38.

You could just return a single float, but see my next post for why I am already using a vector.

float ao = 1.0-totStrength*bl*invSamples;

return float4(ao,ao,ao,ao);
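The final formula is simple enough to check by hand. Here is a small Python sketch of it, using the totStrength value from this post. The explicit clamp to the range 0 to 1 is an assumption of mine: the shader above does not clamp and relies on whatever the render target does with out-of-range values.

```python
def finalize_ao(bl, sample_count, tot_strength=1.38):
    """Turn the accumulated occlusion into an ambient factor (1 = unoccluded)."""
    inv_samples = 1.0 / sample_count
    ao = 1.0 - tot_strength * bl * inv_samples
    # Clamp added for this sketch only; the shader returns ao as it is.
    return min(max(ao, 0.0), 1.0)


for bl in (0.0, 4.5, 9.0):
    print(bl, finalize_ao(bl, 9))
```

Because totStrength is larger than 1, a pixel does not need every sample to be fully occluded before it turns completely black.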

That was easy

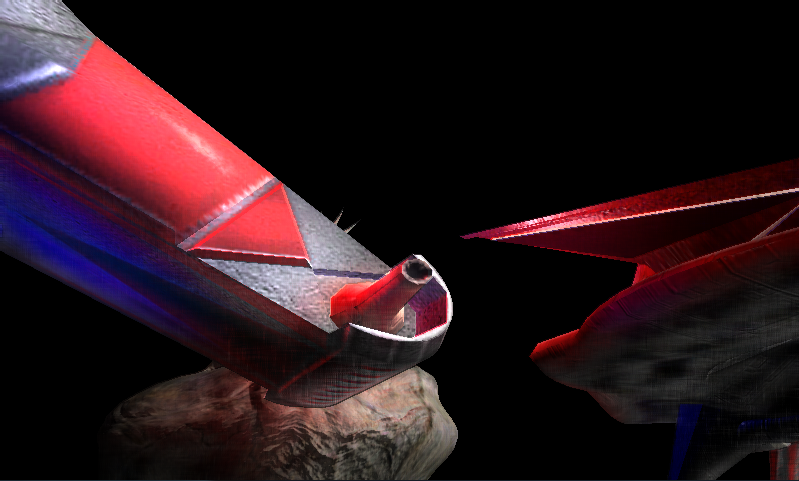

That's it. How many lines of code, 15? Of course this technique is not free from flaws and artifacts.

Changing the radius too much leads to a lot of unwanted occlusion artifacts, and since only screen space is considered, only visible things have an effect.

I experienced some edge bleeding artifacts when depth values differ too much (?) that even an additional blur pass was not able to mitigate (visible in the pictures below).

XNA 4.0 also only supports shader model 3.0 (in the HiDef profile), which means a limited instruction count, which in turn limits the quality of such an effect.

An additional blur pass is a must, though.
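This post does not show my blur shader, so here is only a rough idea of what such a pass does, written as CPU-side Python. It is a plain box blur over the AO buffer, and the function name and the radius are assumptions. A depth-aware blur that skips neighbours with very different depth values is a common refinement, but it is not shown here.

```python
def box_blur(ao, radius=1):
    """Average each AO value with its neighbours; borders use the pixels that exist."""
    height, width = len(ao), len(ao[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += ao[ny][nx]
                    count += 1
            out[y][x] = total / count
    return out


noisy = [[1.0, 1.0, 1.0],
         [1.0, 0.0, 1.0],
         [1.0, 1.0, 1.0]]
blurred = box_blur(noisy)
print(blurred[1][1], blurred[0][0])
```

The single dark pixel in the middle is spread over its neighbourhood, which is what hides the noise pattern left by the random sampling.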